How GN’s Deep Neural Networks help make sense of speech in noisy environments

There can be no denying that we live in an exciting time for hearing technology, as AI is making hearing aids more powerful, including at recognising speech. As a result, users can hear more without their brains having to work harder. This makes hearing easier, particularly in noisy environments, and reduces fatigue.

Many hearing aid manufacturers have their own solutions, and GN’s is Intelligent Focus. The firm says it empowers users by focusing on what they want to hear, putting them in control and allowing the brain to work to its natural strengths. By spotlighting speech over distractions, GN says, it can free up mental bandwidth and let people hear better.

New research supports this: lab tests run by Hörzentrum Oldenburg show that the ReSound Vivia and Beltone Envision are top-rated for speech intelligibility in noise. The data show that the hearing aids deliver measurable advantages in realistic group conversation tests, helping users follow conversations more easily.

The company’s Chief Audiology Officer, Laurel Christensen, is delighted that the independent research has confirmed the feedback from customers who have given the ReSound Vivia and Beltone Envision top user satisfaction scores.

“We love to see those results,” she says. “It is always in our best interest to have an outside, objective location do the testing. Oldenburg is a really nice place to do this, as they have developed their own assessments.”

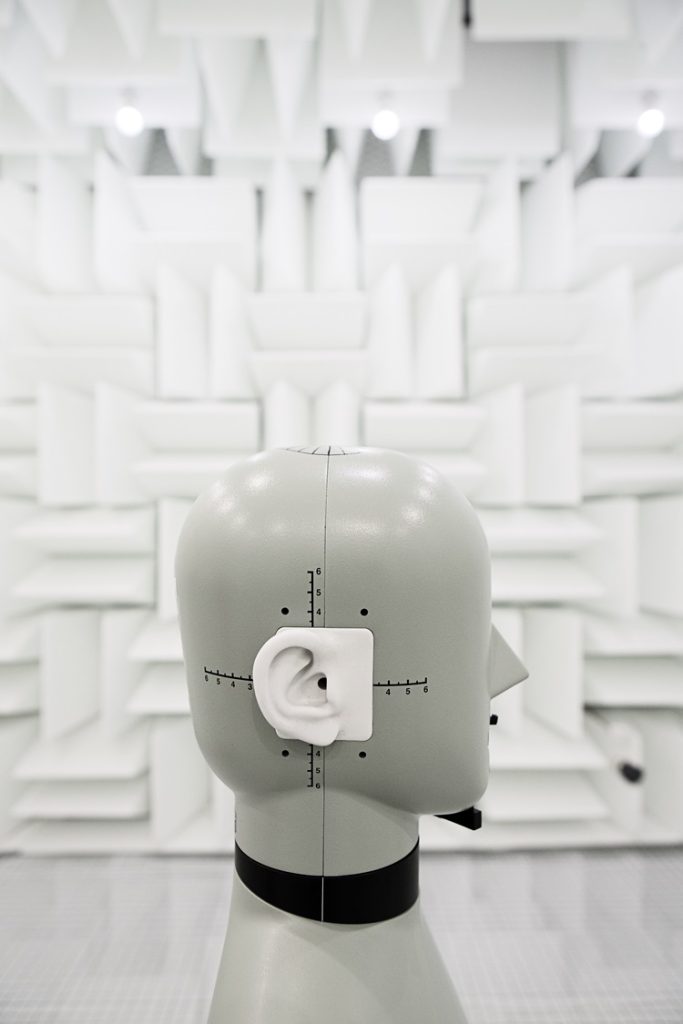

These include a tool to measure listening effort, while a second test – used in the ReSound Vivia study – has three people talking: the listener first attends to just one of the three, and then to all three. This tests the hearing aid’s directional microphones and its Deep Neural Network (DNN), which helps with noise reduction.

“Oldenburg has found that on average, we are performing better than three other top-of-the-line hearing aids on the market today, one of which also has denoising capabilities,” Laurel says. “That is just phenomenal for us: GN doesn’t compromise, and that is important for someone to know when purchasing a hearing aid.

“If you want a small, discreet size; if you want long battery life; if you want comfort; if you want the aids to be easy to use; and if you want to hear in noise incredibly well – those are things you don’t want to compromise on.”

Laurel explains that Deep Neural Networks can take a lot of processing power and a lot of battery life, so GN’s engineers have had to work hard to make the hearing aids power-efficient while also providing the features people need to live their everyday lives.

Deep Neural Networks must be trained to distinguish all kinds of sounds, including speech, traffic, and background noises such as the babble of conversations that people will hear in cafes. Doing so is not a small undertaking, and each scenario requires a massive dataset for training.

“These are the situations that people are going to deal with, and the DNNs need to be trained on real, live conversational speech,” Laurel explains. “They did it in such a way that it was very efficient.”

At a GN staff forum, researchers shared an update on the early development of Deep Neural Network programming, and Laurel had the opportunity to find out more. She tuned in to the noise reduction programme and said it felt like she had put on the headphones she used when flying to block out the sound of the plane.

“Oh my gosh, I could still hear the speech while the noise was literally gone. That’s when I knew it was something,” she recalls.

This was long before it was in a hearing aid, but Laurel says GN realised they would have a “huge product down the line”. The work continued on getting the Deep Neural Network onto a chip and into the aid, a considerable challenge given that the technology is still emerging.

“A Deep Neural Network is a tool that can be trained to do something that will help in the hearing aid,” Laurel explains. “It can classify the environment and make changes to the hearing aid that are best for that environment.

“When you do noise reduction, the processing power needed is immense. There isn’t a hearing aid chip out there that can do that, even if they repurpose a core. It might not always be the case, but you have to use an AI accelerator to do it today, and it has to do it in a way that is very efficient so you can power the hearing aid all day.

“What our engineers accomplished is amazing.”

The result is the ReSound Vivia and the Beltone Envision, and Laurel says GN is receiving “probably the best feedback I’ve ever seen on a hearing aid”. That includes people saying they are hearing in situations that they had previously never been able to hear in – something we can vouch for.

“I would say it’s some of the best feedback we’ve ever had. A lot of people can’t believe we were able to do what we’ve done,” she says.

Laurel says that Deep Neural Networks mark the start of a new chapter in hearing aid development, and that there is a long way to go with the technology.

“What we have today is phenomenal. The denoising is taking noise out, but currently it is the directional microphones that are providing us with the most ability to hear in noise. That won’t always be the case.

“Today, hearing aids can’t know what you want to listen to, and we must be very cognisant about that. Someone asked me if a hearing aid would ever know. There are sensors and other things on the horizon that could cue the hearing aid to hear what you want to hear: it will happen, probably in the next five to 10 years, that hearing aids will know the user’s intent.”

The hearing aids that sit behind or go in the ear are getting cleverer, more powerful, more helpful and, in many cases, smaller. As Laurel says, “there is way better to come”.

Clearly, this is an exciting time for hearing technology that will benefit millions.